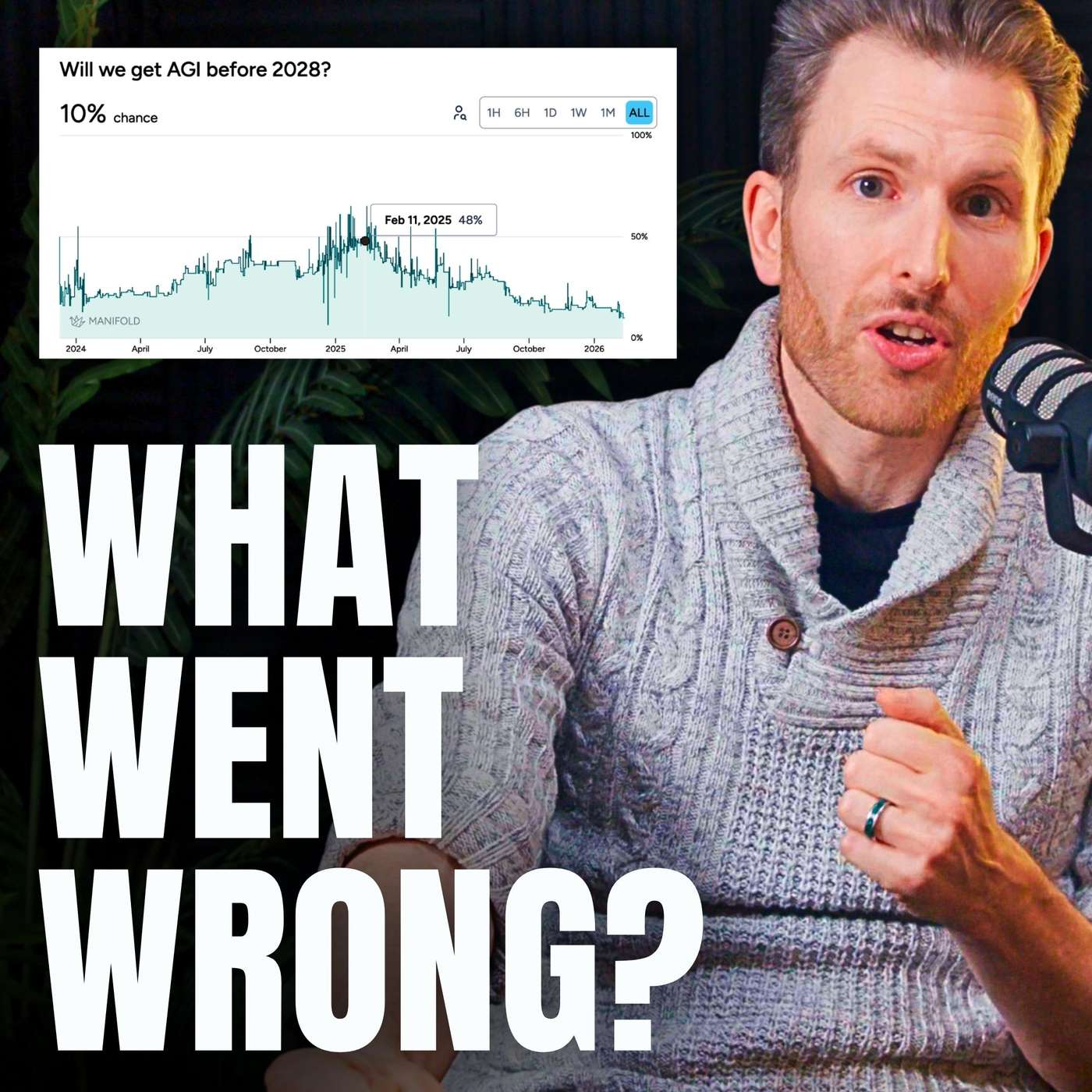

The AI industry's exponentially growing consumption of compute, electricity, and talent is unsustainable. By 2032, it will have absorbed most of the available slack from other industries. Further progress will then require potentially unfundable trillion-dollar training runs, making the years before that point a critical period for AGI development.

Related Insights

The primary bottleneck for scaling AI over the next decade may be the difficulty of bringing gigawatt-scale power online to support data centers. Smart money is already focused on this challenge, which is more complex than securing silicon supply.

The focus in AI has shifted from rapid gains in software capability to the physical constraints on its adoption. Demand for compute is expected to significantly outstrip supply, making infrastructure, not algorithms, the defining bottleneck for future growth.

Pat Gelsinger contends that the true constraint on AI's expansion is energy availability. He frames the issue starkly: every gigawatt of power required by a new data center is equivalent to building a new nuclear reactor, a massive physical infrastructure challenge that will limit growth more than chips or capital.

Despite staggering announcements of new AI data centers, the availability of electrical power will be a primary limiting factor. Power infrastructure is not growing fast enough to support all the announced plans, creating a physical bottleneck that will likely lead to project failures and investment "carnage."

The current AI investment surge is a dangerous "resource grab" phase, not a typical bubble. Companies are desperately securing scarce resources (power, chips, and top scientists), driven by an existential fear of being left behind. This isn't a normal CapEx cycle; the spending is almost guaranteed to continue until a dead end is proven.

The AI buildout won't be stopped by technological limits or lack of demand. The true barrier will be economics: when the marginal capital provider determines that the diminishing returns from massive investments no longer justify the cost.

Most of the world's energy capacity build-out for the next decade was planned using old models that entirely omit the exponential power demands of AI. This creates a looming bottleneck for AI infrastructure development that is not yet priced in and will require significant new investment and planning.

According to Arista's CEO, the primary constraint on building AI infrastructure is the massive power consumption of GPUs and networks. Finding data center locations with gigawatts of available power can take 3-5 years, making energy access, not technology, the main limiting factor for industry growth.

Musk argues that by the end of 2024, the primary constraint for large-scale AI will no longer be the supply of chips, but the ability to find enough electricity to power them. He predicts chip production will outpace the energy grid's capacity, leaving valuable hardware idle and creating a new competitive front based on power generation.

As hyperscalers build massive new data centers for AI, the critical constraint is shifting from semiconductor supply to energy availability. The core challenge becomes sourcing enough power, raising new geopolitical and environmental questions that will define the next phase of the AI race.