Research from Duncan Watts shows the bigger societal issue isn't fabricated facts (misinformation), but rather taking true data points and drawing misleading conclusions (misinterpretation). Misinterpretation happens 41 times more often than misinformation and is a more insidious problem for decision-makers.

Related Insights

Inaccurate headline statistics are not just an academic concern; they actively shape policy. The misleading Consumer Price Index (CPI), for example, is used to determine Social Security benefits, food assistance eligibility, and state-level minimum wages. This means policy decisions are based on a distorted view of economic reality, leading to ineffective outcomes.

A significant portion (30-50%) of the statistics, news, and niche details from ChatGPT is inferred and not factually accurate. Users must be aware that even official-sounding stats can be completely fabricated, which puts credibility at risk in professional work like presentations.

The erosion of trusted, centralized news sources by social media creates an information vacuum. This forces people into a state of 'conspiracy brain,' where they either distrust all information or create flawed connections between unverified data points.

A content moderation failure revealed a sophisticated misuse tactic: campaigns used factually correct but emotionally charged information (e.g., school shooting statistics) not to misinform, but to intentionally polarize audiences and incite conflict. This challenges traditional definitions of harmful content.

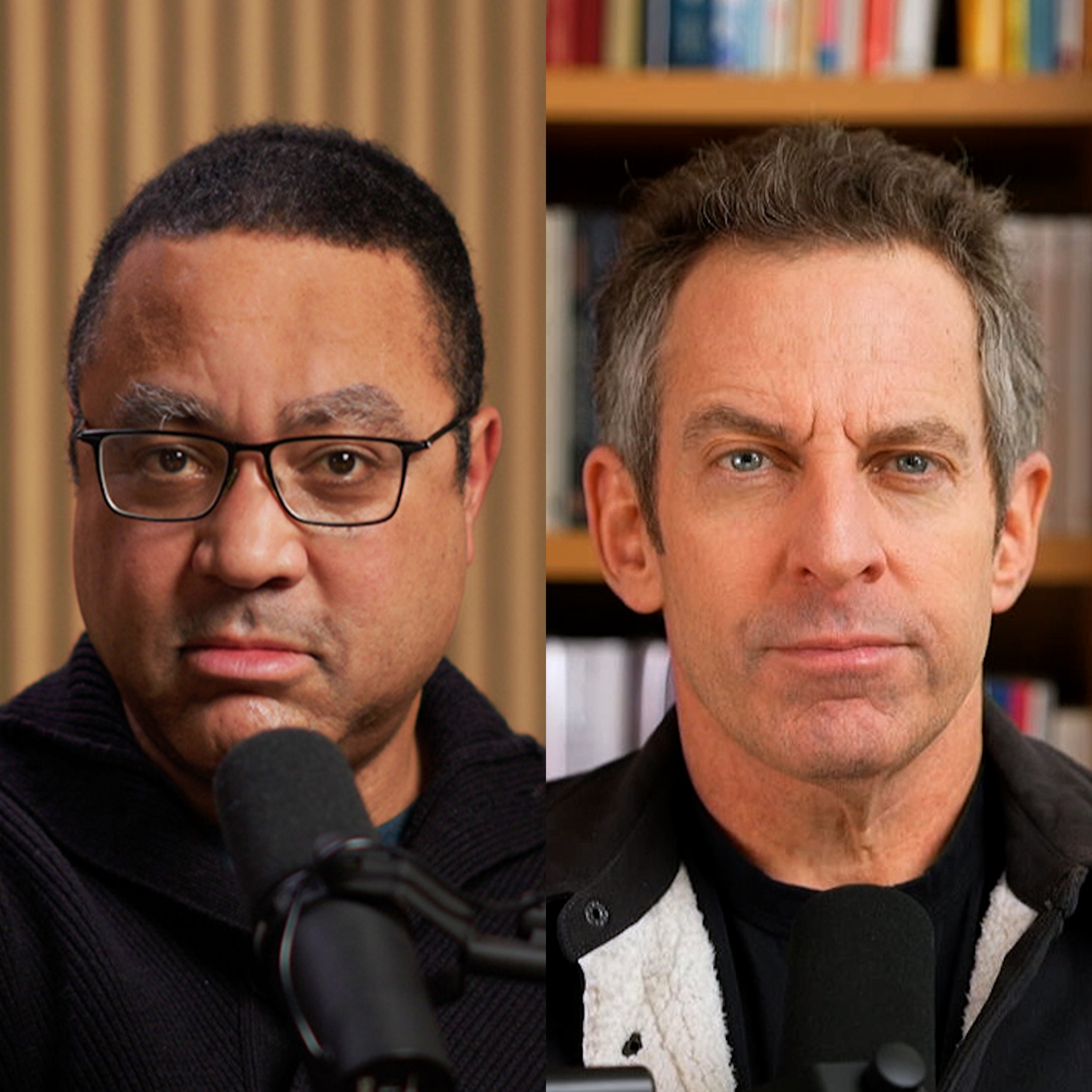

John McWhorter identifies a key error post-George Floyd: the widespread belief that police frequently kill unarmed Black men. He notes public estimates are off by orders of magnitude from the actual data (around 10-15 per year). This statistical illiteracy, amplified by viral videos, created a false narrative impervious to facts.

The modern media ecosystem is defined by the decomposition of truth. From AI-generated fake images to conspiracy theories blending real and fake documents on X, people are becoming accustomed to an environment where discerning absolute reality is difficult and are willing to live with that ambiguity.

A recent poll shows over half of U.S. voters attribute electricity price increases to AI data centers. This belief is consistent across all regions, even in areas like the Northeast where data center growth is minimal, indicating a significant disconnect between public perception and regional reality.

The public appetite for surprising, "Freakonomics-style" insights creates a powerful incentive for researchers to generate headline-grabbing findings. This pressure can lead to data manipulation and shoddy science, contributing to the replication crisis in social sciences as researchers chase fame and book deals.

The AI debate is becoming polarized as influencers and politicians present subjective beliefs with high conviction, treating them as non-negotiable facts. This hinders balanced, logic-based conversations. It is crucial to distinguish testable beliefs from objective truths to foster productive dialogue about AI's future.

A two-step analytical method can be used to vet information. First, distinguish objective (multi-source, verifiable) facts from subjective (opinion-based) claims. Second, assess claims on a matrix of probability and source reliability. An improbable claim from a low-reliability source, as with many conspiracy theories, should be considered highly unlikely to be true.
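
The two steps can be sketched in Python as a rough illustration. Everything concrete here is an assumption made for the example rather than part of the original method: the `Claim` fields, the two-source minimum, the 0.5 cutoff, and every verdict label except "highly unlikely" for the low-reliability, improbable cell, which is the only cell the text above defines.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    text: str
    independent_sources: int   # number of independent sources reporting it
    verifiable: bool           # can it be checked against records or data?
    probability: float         # analyst's plausibility estimate, 0.0 to 1.0
    source_reliability: float  # analyst's rating of the source, 0.0 to 1.0


def is_objective(claim: Claim, min_sources: int = 2) -> bool:
    """Step 1: treat a claim as objective if it is verifiable and multi-source.

    The two-source minimum is an assumed threshold, not from the text.
    """
    return claim.verifiable and claim.independent_sources >= min_sources


# Step 2: a 2x2 matrix of (source reliability, probability).
# Only the ("unreliable", "improbable") verdict comes from the text;
# the other three labels are illustrative assumptions.
VERDICTS = {
    ("reliable", "probable"): "likely",
    ("reliable", "improbable"): "needs corroboration",
    ("unreliable", "probable"): "needs corroboration",
    ("unreliable", "improbable"): "highly unlikely",
}


def matrix_cell(claim: Claim, cutoff: float = 0.5) -> tuple[str, str]:
    """Place the claim in a matrix cell using an assumed 0.5 cutoff."""
    reliability = "reliable" if claim.source_reliability >= cutoff else "unreliable"
    probability = "probable" if claim.probability >= cutoff else "improbable"
    return reliability, probability


def vet(claim: Claim) -> tuple[str, str]:
    """Run both steps and return (claim kind, verdict)."""
    kind = "objective" if is_objective(claim) else "subjective"
    return kind, VERDICTS[matrix_cell(claim)]


if __name__ == "__main__":
    rumor = Claim(
        text="An anonymous post alleges a secret plot",
        independent_sources=1,
        verifiable=False,
        probability=0.05,
        source_reliability=0.1,
    )
    print(vet(rumor))
```

The scores are judgment calls made by whoever is vetting the claim, so the output is only as good as those inputs. The sketch is meant to make the matrix explicit, not to automate the judgment.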