AI adoption isn't linear. Even a 1% improvement in model capability can be the critical step that clears a usability hurdle, transforming a "toy" into a production-ready tool. This creates sudden, discontinuous leaps in market adoption that are hard to predict from capability trend lines alone.

Related Insights

The future of AI is hard to predict because increasing a model's scale often produces 'emergent properties'—new capabilities that were not designed or anticipated. This means even experts are often surprised by what new, larger models can do, making the development path non-linear.

Despite access to state-of-the-art models, most ChatGPT users defaulted to older versions. The cognitive load of using a "model picker" and uncertainty about speed/quality trade-offs were bigger barriers than price. Automating this choice is key to driving mass adoption of advanced AI reasoning.

Users frequently write off an AI's ability to perform a task after a single failure. However, with models improving dramatically every few months, what was impossible yesterday may be trivial today. This "capability blindness" prevents users from unlocking new value.

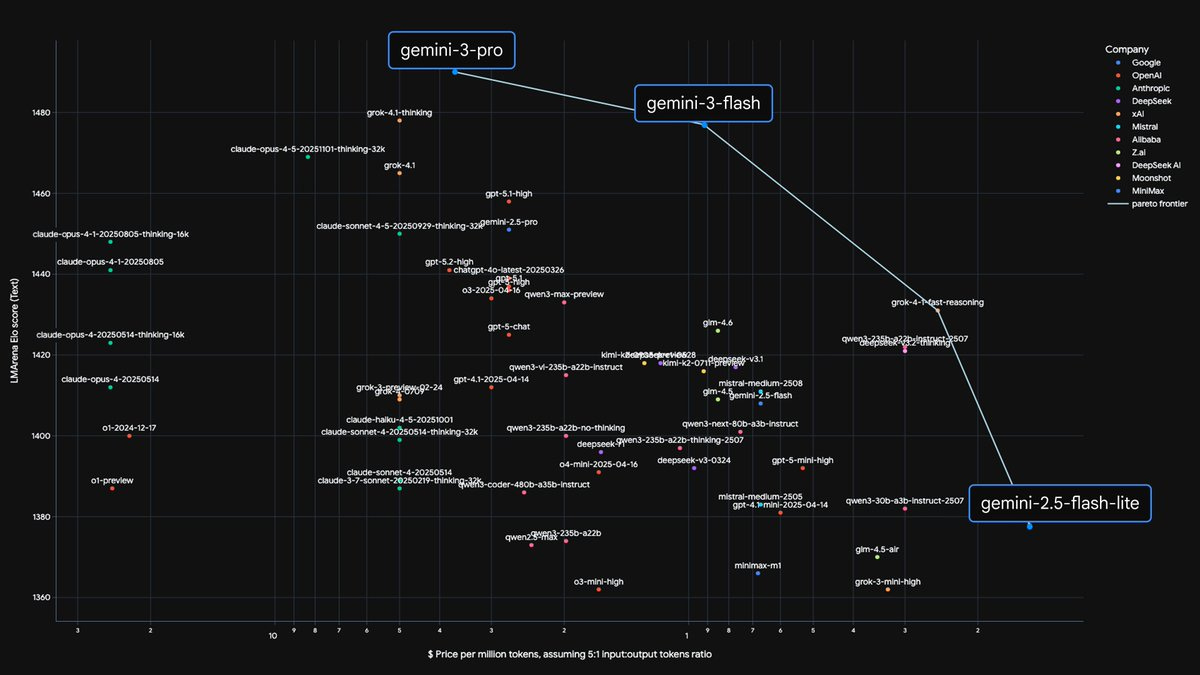

Data from Ramp indicates enterprise AI adoption has stalled at 45%, with 55% of businesses not paying for AI. This suggests that simply making models smarter isn't driving growth. The next adoption wave requires AI to become more practically useful and demonstrate clear business value, rather than just offering incremental intelligence gains.

Anthropic's Cowork isn't a technological leap over Claude Code; it's a UI and marketing shift. This demonstrates that the primary barrier to mass AI adoption isn't model power, but productization. An intuitive UI is critical to unlock powerful tools for the 99% of users who won't use a command line.

The slow adoption of AI isn't due to a natural 'diffusion lag' but is evidence that models still lack core competencies for broad economic value. If AI were as capable as skilled humans, it would integrate into businesses almost instantly.

Ramp's AI index shows paid AI adoption among businesses has stalled. This indicates the initial wave of adoption driven by model capability leaps has passed. Future growth will depend less on raw model improvements and more on clear, high-ROI use cases for the mainstream market.

The true measure of a new AI model's power isn't just improved benchmarks, but a qualitative shift in fluency that makes using previous versions feel "painful." This experiential gap, where the old model suddenly feels worse at everything, is the real indicator of a breakthrough.

AI's "capability overhang" is massive. Models are already powerful enough for huge productivity gains, but enterprises will take 3-5 years to adopt them widely. The bottleneck is the immense difficulty of integrating AI into complex workflows that span dozens of legacy systems.

Don't assume that a "good enough" cheap model will satisfy all future needs. Jeff Dean argues that as AI models become more capable, users' expectations and the complexity of their requests grow in tandem. This creates a perpetual need to push the performance frontier, as today's complex tasks become tomorrow's standard expectations.