When an AI updates an application, it could accidentally drop data. The Motoko language on the Internet Computer provides a "guardrail" by checking that the migration logic touches every piece of data and rejecting the update if data loss is possible.
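The idea of such a guardrail can be illustrated with a minimal sketch. This is not Motoko's actual mechanism or API; it is a Python illustration that assumes a migration declares which stored fields it reads and which it discards on purpose. The names `Migration`, `check_migration`, and `DataLossError` are hypothetical.

```python
from dataclasses import dataclass, field


class DataLossError(Exception):
    """Raised when a migration would silently drop stored fields."""


@dataclass
class Migration:
    # Hypothetical description of a migration: the old fields it reads
    # and the old fields it discards on purpose.
    consumed: set[str] = field(default_factory=set)
    explicitly_dropped: set[str] = field(default_factory=set)


def check_migration(old_fields: set[str], migration: Migration) -> None:
    """Reject the upgrade unless every old field is accounted for."""
    unaccounted = old_fields - migration.consumed - migration.explicitly_dropped
    if unaccounted:
        raise DataLossError(f"migration ignores fields: {sorted(unaccounted)}")


old_state = {"balances", "owners", "audit_log"}

# Every field is either carried over or dropped deliberately: accepted.
safe = Migration(consumed={"balances", "owners"}, explicitly_dropped={"audit_log"})
check_migration(old_state, safe)

# Two fields are never mentioned: the update is rejected before it runs.
risky = Migration(consumed={"balances"})
try:
    check_migration(old_state, risky)
except DataLossError as err:
    print(err)
```

The design choice in this sketch is that dropping data is allowed only when it is stated explicitly, so an update that simply forgets a field fails the check instead of losing it.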

Related Insights

While AI can generate code, the stakes on blockchain are too high for bugs, as they lead to direct financial loss. The solution is formal verification, using mathematical proofs to guarantee smart contract correctness. This provides a safety net, enabling users and AI to confidently build and interact with financial applications.

Unlike traditional clouds, the Internet Computer protocol is designed to make applications inherently secure and resilient, eliminating the need for typical cybersecurity measures like firewalls or anti-malware software.

Vercel is building infrastructure based on a threat model where developers cannot be trusted to handle security correctly. By extracting critical functions like authentication and data access from the application code, the platform can enforce security regardless of the quality or origin (human or AI) of the app's code.

Unlike traditional SaaS, AI applications have a unique vulnerability: a step-function improvement in an underlying model could render an app's entire workflow obsolete. What seems defensible today could become a native model feature tomorrow (the 'Jasper' risk).

Unlike traditional software, where a bug can be patched with high certainty, fixing a vulnerability in an AI system is unreliable. The underlying problem often persists because the AI's neural network (its 'brain') remains susceptible to being tricked in novel ways.

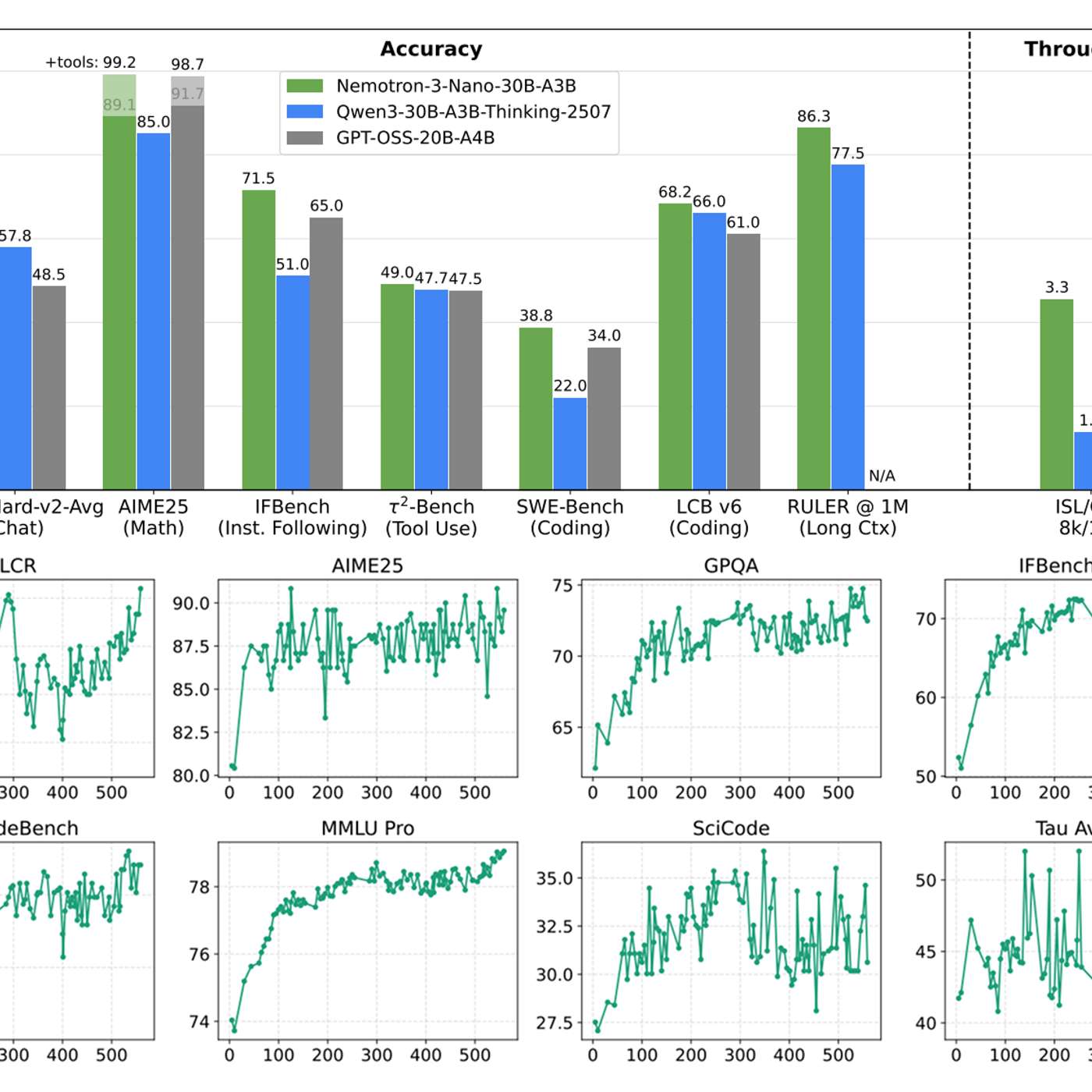

While content moderation models are common, production-grade AI safety requires more. The most valuable asset is not another model but comprehensive datasets of multi-step agent failures. NVIDIA's release of 11,000 labeled traces of workflows that went 'sideways' provides the data needed to build robust evaluation harnesses and fine-tune effective safety layers.

The system replicates computing across nodes protected by a mathematical protocol. This ensures applications remain secure and functional even if malicious actors gain control of some underlying hardware.

Simply providing data to an AI isn't enough; enterprises need 'trusted context': data enriched with governance, lineage, consent management, and business rule enforcement. With that context, AI actions are not just relevant but also compliant, secure, and aligned with business policies.

When deploying AI for critical functions like pricing, operational safety is more important than algorithmic elegance. The ability to instantly roll back a model's decisions is the most crucial safety net. This makes a simpler, fully reversible system less risky and more valuable than a complex one that cannot be quickly controlled.

A comprehensive AI safety strategy mirrors modern cybersecurity, requiring multiple layers of protection. This includes external guardrails, static checks, and internal model instrumentation, which can be combined with system-level data (e.g., a user's refund history) to create complex, robust security rules.
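As a rough sketch of how such layers might be combined into one rule, the Python below merges an external guardrail verdict, a static check, an internal risk score, and a user's refund history. The field names, thresholds, and the `decide_refund` function are all assumptions made for illustration, not a description of any specific product.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    guardrail_flagged: bool    # verdict from an external guardrail
    static_check_passed: bool  # e.g. the output matched an allowed format
    probe_risk: float          # hypothetical internal-instrumentation score in [0, 1]
    refunds_last_90_days: int  # system-level data about the user


def decide_refund(s: Signals) -> str:
    """Combine the layers into a single decision (thresholds are illustrative)."""
    # Any hard failure in the outer layers blocks the action outright.
    if s.guardrail_flagged or not s.static_check_passed:
        return "block"
    # A very high internal risk score blocks on its own.
    if s.probe_risk > 0.8:
        return "block"
    # A moderate score only matters when the account history is also unusual.
    if s.probe_risk > 0.4 and s.refunds_last_90_days >= 3:
        return "escalate"
    return "allow"


print(decide_refund(Signals(False, True, 0.5, 4)))
```

No single signal decides the moderate case here: the internal score and the refund history have to agree before a request is escalated to a human.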