Convergence is difficult because both camps in the AI speed debate have a narrative for why the other is wrong. Skeptics believe fast-takeoff proponents are naive storytellers who always underestimate real-world bottlenecks. Proponents believe skeptics generically invoke 'bottlenecks' without providing specific, insurmountable examples, thus failing to engage with the core argument.

Related Insights

The public AI debate is a false dichotomy between 'hype folks' and 'doomers.' Both camps operate from the premise that AI is or will be supremely powerful. This shared assumption crowds out a more realistic critique that current AI is a flawed, over-sold product that isn't truly intelligent.

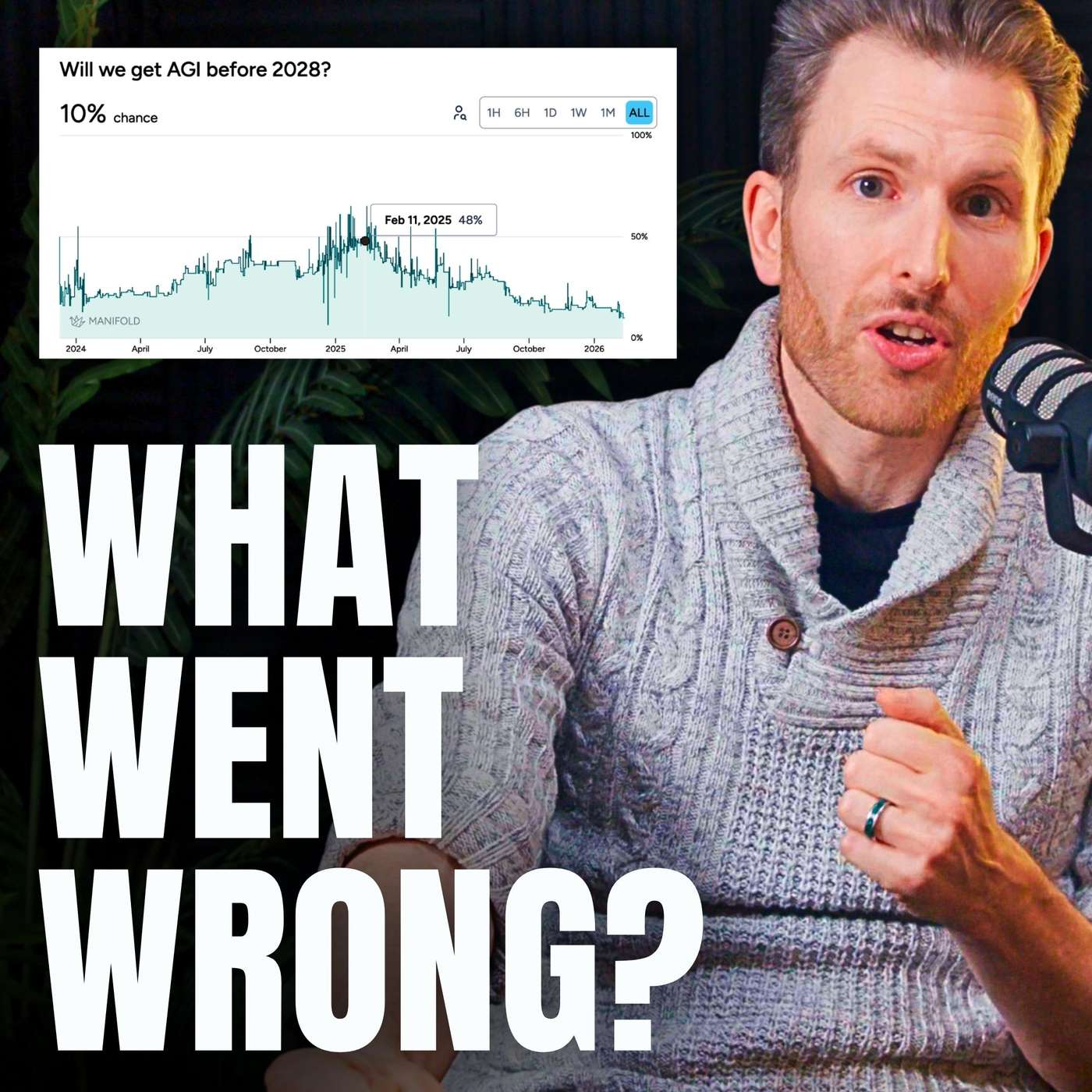

While discourse often focuses on exponential growth, the AI Safety Report presents 'progress stalls' as a serious scenario, drawing an analogy to passenger aircraft speed, which plateaued after 1960. The point is that continued rapid advancement is not guaranteed: technical or resource bottlenecks could slow or halt it.

Leaders at top AI labs publicly state that the pace of AI development is reckless. However, they feel unable to slow down due to a classic game theory dilemma: if one lab pauses for safety, others will race ahead, leaving the cautious player behind.

AI accelerationists and safety advocates often appear to have opposing goals, but they may actually want a similar 10-20 year transition period. The conflict arises because accelerationists believe the default timeline is 50-100 years and want to speed it up, while safety advocates believe the default is an explosive 1-5 years and want to slow it down.

A growing gap exists between AI's performance in demos and its actual impact on productivity. As podcaster Dwarkesh Patel noted, AI models improve at the rapid rate short-term optimists predict, but become useful only at the slower rate long-term skeptics predict. That mismatch explains the widespread disillusionment.

A fundamental tension within OpenAI's board was the catch-22 of safety. While some advocated for slowing down, others argued that being too cautious would allow a less scrupulous competitor to achieve AGI first, creating an even greater safety risk for humanity. This paradox fueled internal conflict and justified a rapid development pace.

Economists skeptical of explosive AI growth take an 'outside view' based on recent history, noting that technologies like the internet didn't cause a productivity boom. Proponents of rapid growth take a much longer historical view, showing that growth rates have accelerated over millennia due to feedback loops, a pattern they believe AI will dramatically continue.

The gap between AI believers and skeptics isn't about who "gets it." It's driven by a psychological need for AI to be a normal, non-threatening technology. People latch onto any argument that supports this view for the sake of their own peace of mind, career stability, or business model, which makes misinformation demand-driven.

True exponential acceleration towards AGI is currently limited by a human bottleneck: our speed at prompting AI and, more importantly, our capacity to manually validate its work. Hockey-stick growth will begin only when AI can reliably validate its own output, closing the productivity loop.

The AI debate is becoming polarized as influencers and politicians present subjective beliefs with high conviction, treating them as non-negotiable facts. This hinders balanced, logic-based conversations. It is crucial to distinguish testable beliefs from objective truths to foster productive dialogue about AI's future.