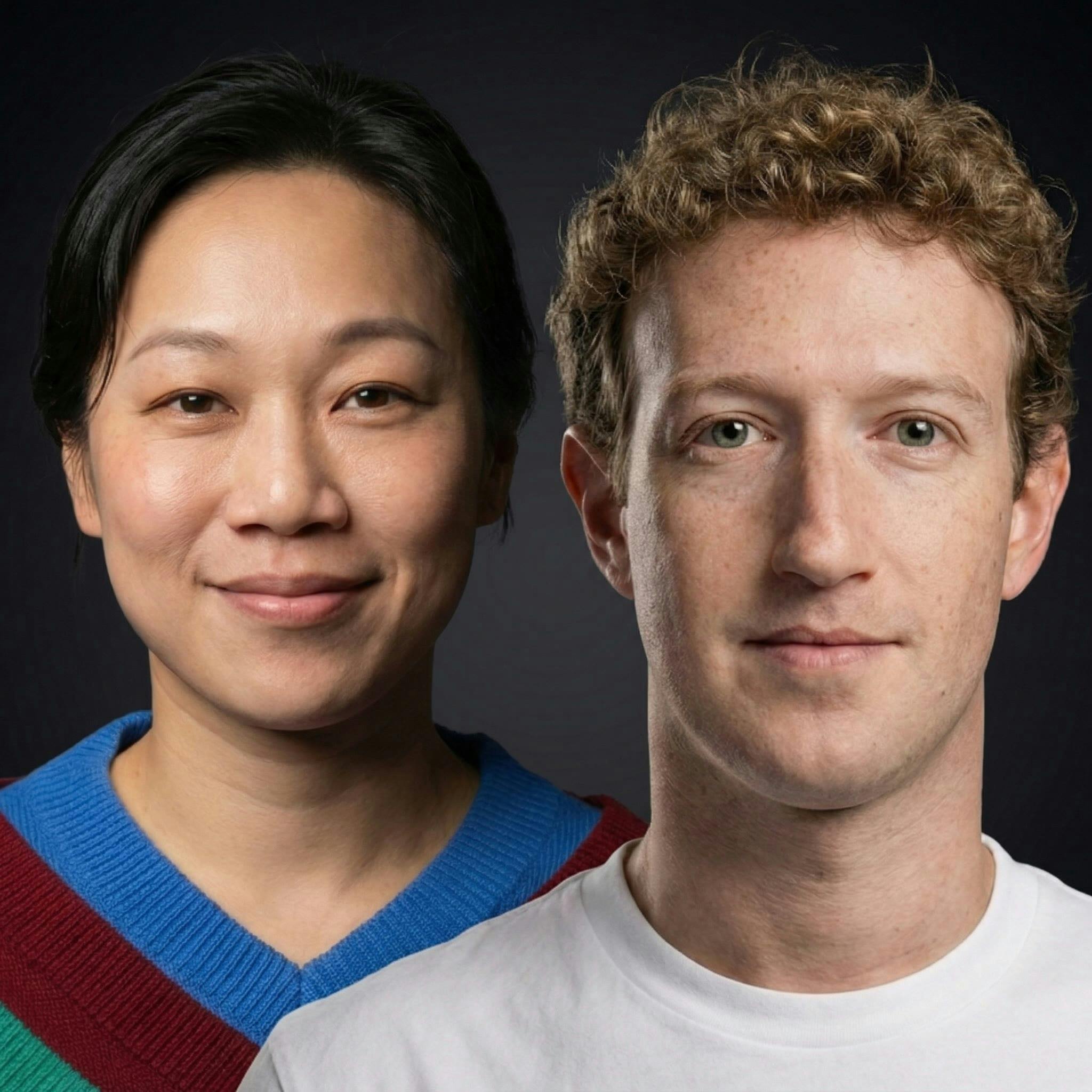

Mark Zuckerberg advocates a new approach: instead of generating data for human analysis, scientists should prioritize building new tools and experiments specifically to generate data that will train and improve AI models. The goal shifts from direct human insight to creating smarter AI that makes novel discoveries.

Related Insights

Wet lab experiments are slow and expensive, forcing scientists to pursue safer, incremental hypotheses. AI models can computationally test riskier, 'home run' ideas before committing lab resources. This de-risking makes scientists less hesitant to explore breakthrough concepts that could accelerate the field.

Google is moving beyond AI as a mere analysis tool. The concept of an 'AI co-scientist' envisions AI as an active partner that helps sift through information, generate novel hypotheses, and outline ways to test them. This reframes human-AI collaboration as a way to accelerate the scientific method itself.

Scientists constrained by limited grant funding often avoid risky but groundbreaking hypotheses. AI can change this by computationally generating and testing high-risk ideas, de-risking them enough for scientists to confidently pursue ambitious "home runs" that could transform their fields.

The next leap in biotech moves beyond applying AI to existing data. CZI pioneers a model where 'frontier biology' and 'frontier AI' are developed in tandem. Experiments are now designed specifically to generate novel data that will ground and improve future AI models, creating a virtuous feedback loop.

The future of valuable AI lies not in models trained on the abundant public internet, but in those built on scarce, proprietary data. For fields like robotics and biology, this data doesn't exist to be scraped; it must be actively created, making the data generation process itself the key competitive moat.

The ultimate goal isn't just modeling specific systems (like protein folding), but automating the entire scientific method. This involves AI generating hypotheses, choosing experiments, analyzing results, and updating a 'world model' of a domain, creating a continuous loop of discovery.

AI will not make physical experiments obsolete; its real power is predictive. AI can virtually iterate through many potential experiments to identify which ones are most likely to succeed, so lab resources go to the most promising candidates and fewer experiments fail.

Current LLMs fail at science because they lack the ability to iterate. True scientific inquiry is a loop: form a hypothesis, conduct an experiment, analyze the result (even when the hypothesis turns out to be wrong), and refine. AI needs this same iterative capability with the real world to make genuine discoveries.

Dr. Fei-Fei Li realized AI was stagnating not because of flawed algorithms, but because of a missed scientific hypothesis. The breakthrough insight behind ImageNet was that creating a massive, high-quality dataset was the fundamental problem to solve, shifting the paradigm from model-centric to data-centric.

The founder of AI and robotics firm Medra argues that scientific progress is not limited by a lack of ideas or AI-generated hypotheses. Instead, the critical constraint is the physical capacity to test these ideas and generate high-quality data to train better AI models.