To fully express intent, AI applications cannot rely on a single modality. They need structured code for control flow, natural language for defining fuzzy tasks (as in DSPy's signatures), and example data for optimization and for capturing long-tail behavior.
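
As a rough illustration of how the three modalities can sit side by side, here is a minimal sketch in plain Python. It does not use the DSPy API; `INSTRUCTION`, `EXAMPLES`, `build_prompt`, `classify`, and `stub_lm` are hypothetical names chosen for this example, and `stub_lm` stands in for a real model call.

```python
from typing import Callable

# Natural language: the fuzzy task is stated in words.
INSTRUCTION = "Label the review's sentiment as 'positive' or 'negative'."

# Example data: labeled cases, including a long-tail one (sarcasm).
EXAMPLES = [
    ("I loved it", "positive"),
    ("Terrible service", "negative"),
    ("Oh great, another delay", "negative"),
]


def build_prompt(text: str) -> str:
    """Combine the instruction and the examples into one prompt string."""
    demos = [f"Review: {r}\nSentiment: {s}" for r, s in EXAMPLES]
    query = f"Review: {text}\nSentiment:"
    return "\n\n".join([INSTRUCTION, *demos, query])


def classify(text: str, lm: Callable[[str], str]) -> str:
    """Code: deterministic control flow around the fuzzy model call."""
    if not text.strip():
        return "unknown"  # handled in code, never sent to the model
    answer = lm(build_prompt(text)).strip().lower()
    # Validate the model's free-form output against the allowed labels.
    return answer if answer in {"positive", "negative"} else "unknown"


def stub_lm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; always answers the same."""
    return " Negative\n"
```

Each piece does the job it suits best: the instruction carries the vague part of the intent, the examples pin down cases the wording alone would miss, and the code enforces what must never vary, such as the set of allowed labels.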

Related Insights

As models become more powerful, the primary challenge shifts from improving capabilities to creating better ways for humans to specify what they want. Natural language is too ambiguous and code too rigid, creating a need for a new abstraction layer for intent.

Instead of asking an AI to directly build something, the more effective approach is to instruct it on *how* to solve the problem: gather references, identify best-in-class libraries, and create a framework before implementation. This means working one level of abstraction higher than the code itself.

The early focus on crafting the perfect prompt is obsolete. Sophisticated AI interaction is now about 'context engineering': architecting the entire environment by providing models with the right tools, data, and retrieval mechanisms to guide their reasoning process effectively.

The effectiveness of an AI system doesn't depend solely on the model's sophistication. It depends on the combination of high-quality training data, the model itself, and an understanding of the context in which both are applied to a real-world problem. Neglecting data or context leads to poor outcomes.

With autonomous AI coding loops, the most leveraged human activity is no longer writing code but meticulously crafting the initial Product Requirements Document (PRD) and user stories. Spending significant upfront time defining the 'what' and 'why' ensures the AI has a perfect blueprint, as the 'garbage-in, garbage-out' principle still applies.

DSPy introduces a higher-level abstraction for programming LLMs, analogous to the jump from Assembly to C. It lets developers define program logic and intent, which is then "compiled" into optimal prompts, ensuring portability and maintainability across different models.
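
To make the "compile" analogy concrete, the sketch below renders one declarative task description into two different prompt layouts. It is an illustration under stated assumptions, not DSPy's implementation: `Signature`, `compile_prompt`, and the `"chat"` and `"completion"` targets are hypothetical, and this is plain templating, whereas real optimization would also search over instructions and examples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signature:
    """Declarative task description: what goes in, what comes out."""
    instruction: str
    inputs: tuple[str, ...]
    output: str


def compile_prompt(sig: Signature, target: str) -> str:
    """Render one signature into a prompt template for a given target style."""
    # Each input becomes a "name: {name}" slot to be filled with str.format.
    fields = "\n".join(f"{name}: {{{name}}}" for name in sig.inputs)
    if target == "chat":
        return f"System: {sig.instruction}\nUser:\n{fields}\nAssistant ({sig.output}):"
    if target == "completion":
        return f"{sig.instruction}\n\n{fields}\n{sig.output}:"
    raise ValueError(f"unknown target: {target}")


# The same intent, defined once, rendered for two hypothetical model styles.
qa = Signature(
    instruction="Answer the question using the context.",
    inputs=("context", "question"),
    output="answer",
)
chat_prompt = compile_prompt(qa, "chat").format(
    context="Paris is in France.", question="Where is Paris?"
)
completion_template = compile_prompt(qa, "completion")
```

The program only ever refers to `qa`; switching models means changing the target, not rewriting prompt strings by hand, which is where the portability claim comes from.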

DSPy's architecture mirrors human thought by providing an imperative structure (standard Python code) for overall program flow. It then isolates ambiguity into declarative "signatures," which define fuzzy tasks for the LLM to execute at the program's leaves, offering the best of both paradigms.
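
The split between imperative flow and declarative leaves might look like the following sketch. It is written in plain Python rather than with the DSPy API; `predict`, `answer_with_search`, the `"inputs -> output"` signature strings, and both stubs are hypothetical names for illustration only.

```python
from typing import Callable

LM = Callable[[str], str]


def predict(signature: str, lm: LM, **inputs: str) -> str:
    """Leaf: run one fuzzy task described by an 'inputs -> output' string."""
    body = "\n".join(f"{k}: {v}" for k, v in inputs.items())
    return lm(f"Task: {signature}\n{body}")


def answer_with_search(
    question: str,
    lm: LM,
    search: Callable[[str], list[str]],
    max_hops: int = 2,
) -> str:
    """Imperative structure: ordinary Python decides how many hops to run."""
    notes: list[str] = []
    for _ in range(max_hops):
        query = predict(
            "question, notes -> search_query",
            lm,
            question=question,
            notes=" | ".join(notes),
        )
        hits = search(query)
        if not hits:
            break  # control flow stays in code, not in the prompt
        notes.extend(hits)
    return predict(
        "question, notes -> answer", lm, question=question, notes=" | ".join(notes)
    )


def stub_lm(prompt: str) -> str:
    """Hypothetical stand-in for a model call."""
    return "stub answer" if "-> answer" in prompt else "stub query"


def stub_search(query: str) -> list[str]:
    """Hypothetical stand-in for a retrieval call."""
    return [f"result for {query}"]
```

The loop, the early exit, and the hop limit are exact and testable, while the two leaves say only what should be produced and leave the how to the model.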

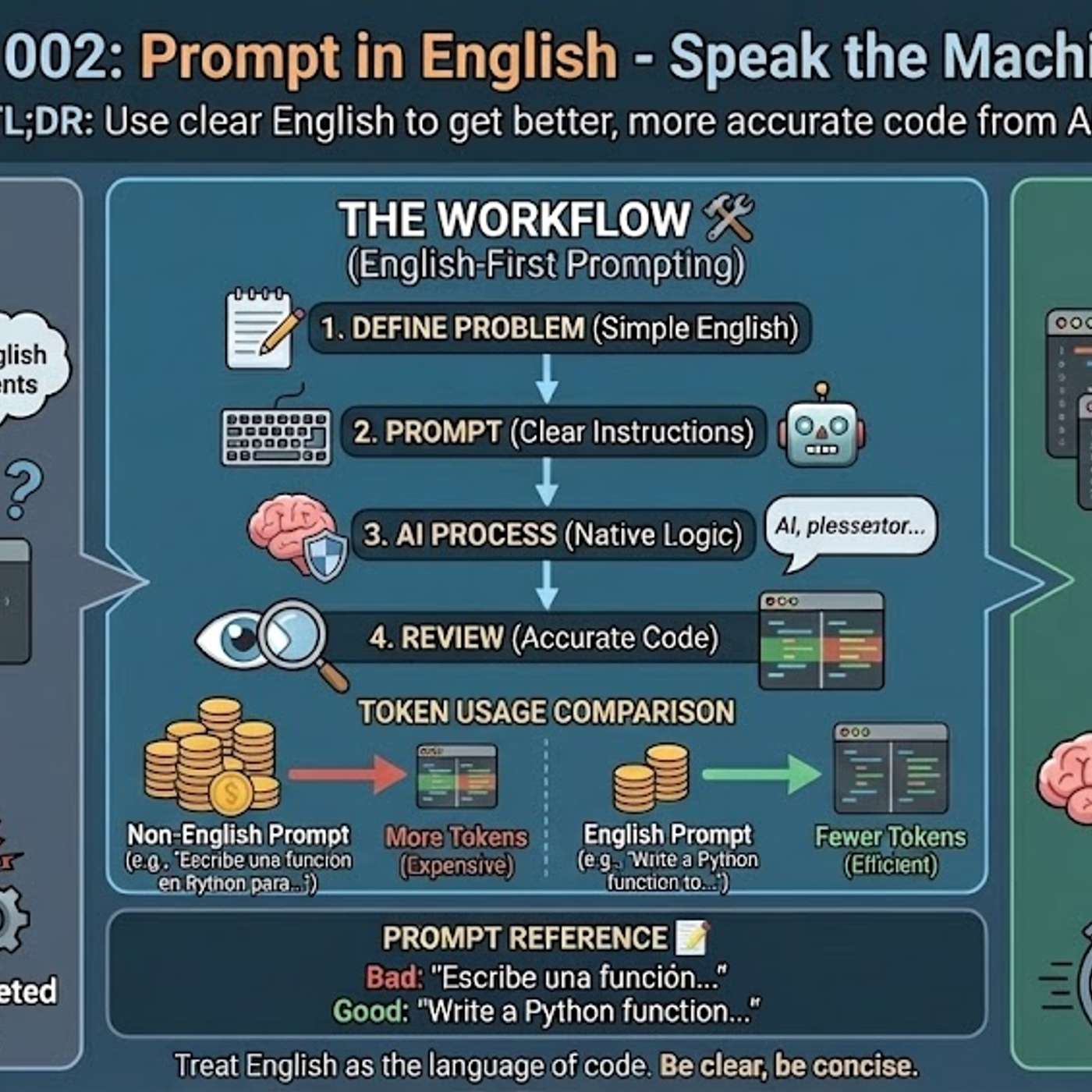

The primary reason AI models generate better code from English prompts is their training data composition. Over 90% of AI training sets, along with most technical libraries and documentation, are in English. This means the models' core reasoning pathways for code-related tasks are fundamentally optimized for English.

AI development has evolved to the point where models can be directed using human-like language. Instead of relying on complex prompt engineering or fine-tuning, developers can provide instructions, documentation, and context in plain English to guide the AI's behavior, democratizing access to sophisticated outcomes.

An AI interacts with the world and uses a computer most effectively when it can write code. OpenAI's thesis is that even agents for non-technical users will be "coding agents" under the hood, as code is the most robust and versatile way for AI to perform tasks.