At massive scale, chip design economics flip. For a $1B training run, the potential efficiency savings on training compute and subsequent inference can far exceed the ~$200M cost of developing a custom ASIC for that specific workload. The bottleneck becomes chip production timelines, not money.

Related Insights

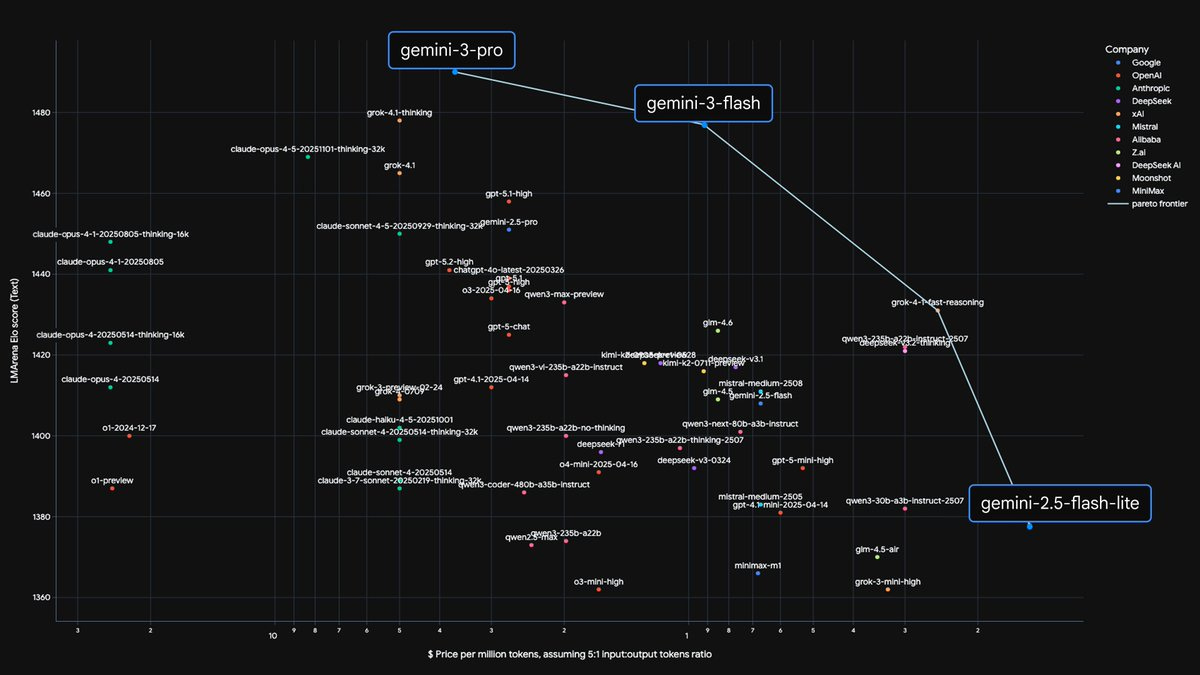

Contrary to the narrative of burning cash, major AI labs are likely highly profitable on the marginal cost of inference. Their massive reported losses stem from huge capital expenditures on training runs and R&D. This financial structure is more akin to an industrial manufacturer than a traditional software company, with high upfront costs and profitable unit economics.

Tech giants often initiate custom chip projects not with the primary goal of mass deployment, but to create negotiating power against incumbents like NVIDIA. The threat of a viable alternative is enough to secure better pricing and allocation, making the R&D cost a strategic investment.

Designing custom AI hardware is a long-term bet. Google's TPU team co-designs chips with ML researchers to anticipate future needs. They aim to build hardware for the models that will be prominent 2-6 years from now, sometimes embedding speculative features that could provide massive speedups if research trends evolve as predicted.

For a hyperscaler, the main benefit of designing a custom AI chip isn't necessarily superior performance, but gaining control. It allows them to escape the supply allocations dictated by NVIDIA and chart their own course, even if their chip is slightly less performant or more expensive to deploy.

Model architecture decisions directly impact inference performance. AI company Zyphra pre-selects target hardware and then chooses model parameters, such as a hidden dimension with many factors of two (that is, divisible by a large power of two), to align with how GPUs split up workloads, maximizing efficiency from day one.
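As a rough illustration of what that alignment check might look like, the sketch below measures how many factors of two a candidate hidden dimension has and rounds it up to a hardware-friendly multiple. The function names and the tile width of 128 are assumptions chosen for illustration; they are not Zyphra's actual procedure or a property of any specific GPU.

```python
def largest_power_of_two_divisor(n: int) -> int:
    """Return the largest power of two that divides n (n must be positive)."""
    if n <= 0:
        raise ValueError("n must be positive")
    # n & -n isolates the lowest set bit, which is the largest power of two dividing n.
    return n & -n


def round_up_to_multiple(n: int, multiple: int) -> int:
    """Round n up to the nearest multiple of `multiple`."""
    return -(-n // multiple) * multiple


# Hypothetical tile width; a real value depends on the target hardware and kernels.
ASSUMED_TILE = 128

candidates = [4096, 5120, 5000]
report = {
    d: (largest_power_of_two_divisor(d), round_up_to_multiple(d, ASSUMED_TILE))
    for d in candidates
}

for dim, (pow2, aligned) in report.items():
    print(f"hidden_dim={dim}: divisible by 2^k={pow2}, aligned to {ASSUMED_TILE} -> {aligned}")
```

Under these assumptions, a dimension like 5000 divides only by 8 and would be padded up to 5120, while 4096 and 5120 already split evenly into tiles.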

True co-design between AI models and chips is currently impossible because of an "asymmetric design cycle": AI models evolve much faster than chips can be designed. Using AI to drastically speed up chip design could close that gap and create a virtuous cycle of co-evolution.

The trend toward specialized AI models is driven by economics, not just performance. A single, monolithic model trained to be an expert in everything would be massive and prohibitively expensive to run continuously for a specific task. Specialization keeps models smaller and more cost-effective for scaled deployment.

While training has been the focus, user experience and revenue happen at inference. OpenAI's massive deal with chip startup Cerebras is for faster inference, showing that response time is a critical competitive vector that determines whether AI becomes utility infrastructure or remains a novelty.

For a $1B training run, the subsequent inference costs will exceed $1B. A custom ASIC could save over 20% ($200M+), which is enough to fund the chip's tape-out. This shifts the hardware bottleneck from manufacturing cost to development timeline.
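The break-even arithmetic behind this claim can be sketched in a few lines. The figures are the round numbers quoted above ($1B of inference spend, a 20% saving, a ~$200M ASIC development cost); the function name and the use of integer percentages are illustrative assumptions, and real costs would include many items this ignores.

```python
def asic_savings(inference_spend_usd: int, savings_pct: int, asic_dev_cost_usd: int):
    """Return (gross savings, net savings after paying for ASIC development)."""
    gross = inference_spend_usd * savings_pct // 100
    return gross, gross - asic_dev_cost_usd


def break_even_spend(savings_pct: int, asic_dev_cost_usd: int) -> int:
    """Inference spend at which the savings exactly cover the ASIC development cost."""
    return asic_dev_cost_usd * 100 // savings_pct


# Round numbers taken from the text above.
gross, net = asic_savings(1_000_000_000, 20, 200_000_000)
threshold = break_even_spend(20, 200_000_000)

print(f"gross savings: ${gross:,}")
print(f"net after ASIC development: ${net:,}")
print(f"break-even inference spend: ${threshold:,}")
```

At exactly $1B of inference spend the chip pays for itself, so every dollar of inference beyond that, or every point of savings above 20%, is net gain under these assumptions.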

The current 2-3 year chip design cycle is a major bottleneck for AI progress, as hardware is always chasing outdated software needs. By using AI to slash this timeline, companies can enable a massive expansion of custom chips, optimizing performance for many at-scale software workloads.